Oscar Flórez-Vargas has just published a paper in the journal eLife as part of his Ph.D. work here in Manchester. The paper is:

Oscar Flórez-Vargas, Andy Brass, George Karystianis, Michael Bramhall, Robert Stevens, Sheena Cruickshank, and Goran Nenadic. Bias in the reporting of sex and age in biomedical research on mouse models. eLife, 5:e13615, 2016.

Earlier in his Ph.D., Oscar made an in-depth study of the quality of methods reporting in research papers describing parasitology experiments. I reported on this study in a blog post, “being a credible virtual witness”; the gist is that for a research paper to act as a credible witness for a scientific experiment, enough of that experiment must be reported that it can be reproduced. Oscar found that the overwhelming majority of the papers in his study failed to report the minimal features required for reproducibility. We did another study, led by Michael Bramhall during his Ph.D., with similar findings: “quality of methods reporting in animal models of colitis”, published in Inflammatory Bowel Diseases.

In both studies, the reporting of experimental method was found to be wanting.

Two of the important factors to report in these and other studies are the age and the sex of the mice; both have a significant impact on many aspects of an organism’s biology and, hence, influence the outcome of experiments. Oscar’s original studies looked in depth at many factors in a relatively small area of biology, across a smallish number of papers gathered by following a systematic review; this time, we wanted to do a broad survey across just these two factors.

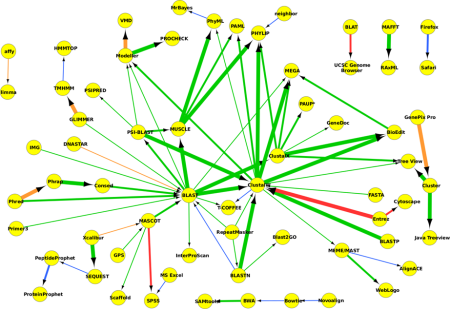

We used text analytics on all papers in the PMC full-text collection that had mouse as the focus of their study; this amounted to 15,311 papers published between 1994 and 2014. I won’t report the details of the study here, but we got good recovery of these two factors and were able to report the following observations:

- The reporting of both sex and age of mice has increased over time, but by 2014 only 50% of papers using mice as the focus of their study reported both the age and sex of those mice.

- There is a distinct bias towards using female mice in studies.

- The sex bias differs between the six pre-clinical research areas we examined; there’s a strong bias towards male mice in cardiovascular disease studies and a bias towards female mice in studies of infectious disease.

- The reporting of age and sex has steadily increased; this change started before either the US Institute of Medicine report in 2001 or the ARRIVE guidelines, both of which called for better reporting of method.

- There were differences in the reporting of sex in the research areas we tested (cardiovascular diseases; cancer; diabetes mellitus; lung diseases; infectious diseases; and neurological disorders). Diabetes had the best reporting of sex and cancer the worst. Age was also reported the least well in cancer studies. Taking both sex and age into account, neurological disorders had the best reporting.

- We also looked at reporting of sex in four sub-groups of study type (genetics, immunology, physiopathology and therapy): male mice were preferred in genetics studies and female mice preferred in immunological studies.

The age and sex of the mice used matter in experiments because both are important factors in the biology being studied. It is difficult to understand exactly why these factors are not better reported. Reporting sex and age takes only about 40 characters of text, so it’s not a space issue.

Previous studies in both human and animal models concluded that males were studied more than females; our study contradicts these studies. The bias towards female mice may be down to practical factors: they are smaller (and therefore need less drug, inoculum, etc. to be administered), are less aggressive to each other and to experimenters, and are cheaper to house. Our study had a large sample size and focused on only one model (mouse), and this may be a factor in why its outcomes differ from those of others. Nevertheless, there appear to be biases in the choice of mouse sex in experiments. The profound effects of sex on an organism’s biology have influenced the creation of the journal Biology of Sex Differences. As sex influences so many aspects of biology, one would suppose that a balance in the sex of the mice used would be a good thing. In this regard, the NIH is engaging the scientific community to improve the sex balance in research.

We used some straightforward text analytics to undertake this study. It has enabled some very interesting questions to be asked and has highlighted some very interesting issues that may affect how confidently we interpret the results reported in papers, and how broadly those results apply. It should be entirely possible to use text analytics in a similar way for other experimental factors, both pre- and post-publication.
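To give a flavour of what this kind of text analytics can look like, here is a minimal Python sketch that pulls mentions of sex and age out of a methods sentence with regular expressions. This is not the pipeline used in the paper; the patterns, the function names (`extract_sex_and_age`, `reports_both`) and the example sentences are all my own illustrative assumptions, and a real system would need to cope with far more variation in how authors phrase things.

```python
import re

# Hypothetical patterns for illustration only; not the rules used in the paper.
SEX_PATTERN = re.compile(r"\b(male|female)s?\b", re.IGNORECASE)
AGE_PATTERN = re.compile(
    r"\b(\d+(?:\s*(?:-|to)\s*\d+)?)[\s-]*(day|week|month)s?[\s-]*old\b",
    re.IGNORECASE,
)


def extract_sex_and_age(text):
    """Return the sexes and ages mentioned in a piece of text."""
    # Collect distinct sex terms, lower-cased and sorted for a stable result.
    sexes = sorted({m.group(1).lower() for m in SEX_PATTERN.finditer(text)})
    # Each age is a (value, unit) pair, e.g. ("6-8", "week").
    ages = [(m.group(1), m.group(2).lower()) for m in AGE_PATTERN.finditer(text)]
    return {"sex": sexes, "age": ages}


def reports_both(text):
    """True if the text mentions at least one sex and at least one age."""
    found = extract_sex_and_age(text)
    return bool(found["sex"]) and bool(found["age"])


if __name__ == "__main__":
    # Invented example sentences, not taken from any real paper.
    for sentence in [
        "Female mice, 6-8 weeks old, were used.",
        "8-week-old male and female mice were housed together.",
        "Mice were infected orally.",
    ]:
        print(sentence, extract_sex_and_age(sentence), reports_both(sentence))
```

The first invented sentence reports both factors in well under 40 characters, which is the point made above: the information is cheap to state and, when it is stated, reasonably easy to find by machine.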